From vol. 111, winter 2015

By Colin Zacharias

Conducting an extended column test. Photo by Colin Zacharias

THE WEIGHT OF EVIDENCE

Every winter day we make snowpack observations and extrapolate from observation sites to nearby terrain. Most days, for most avalanche problems, this extrapolation process works and we make key decisions from comparatively few quality bits of information. But it is easy to lose confidence in our abilities when conditions become unfamiliar or our information becomes scarce.

Outside of current avalanching and other alarm signs, and especially during periods of high snowpack variability, experienced observers tend to steer away from drawing quick conclusions from a few snowpack observations. They recognize that one test is just one observation, and to counter possible extrapolation errors they ensure that over the critical timeframe key information is supported and verified.

On the other hand, inexperienced observers may attach too much importance to a persuasive snowpack test result or a single avalanche occurrence and fall prey to confirmation bias. Experienced forecasters, even with a decent amount of information, recognize that at times their best is still in the end just that.

Karl Klassen, Avalanche Canada Public Avalanche Warning Service Manager and mountain guide, recently reminded me with a nice touch of irony that while our data -> information -> knowledge -> wisdom hierarchy (Zeleny 1987) fits into a neat little package, it can also backfire. Depending on the quality and quantity of the data set, its relevancy, and our ability to interpret the information, data isn’t information and information isn’t knowledge, and if one thing is certain, wisdom is a different kettle of fish.

There are times when logistics make it difficult to add weight to the evidence. Poor weather or difficult travel conditions, for example, may prevent access to terrain or study sites. Yet even then assumptions are made and conclusions derived. As Dr. Bruce Jamieson notes in his mountain snowpack presentation for the ITP Level 2 Module 1, “inaccurate assumptions can have serious consequences” when it comes to spatial variability in the mountain snowpack.

Decisions made from a deficit or even partial deficiency of information required to understand the avalanche problem are considered uncertain in light of an applied risk management strategy (as defined by ISO 31000). In the avalanche world we are okay with uncertainty—so long as we know what we don’t know. We understand that as the measure of uncertainty increases so does that long arm of caution when planning to reduce the risk.

In today’s avalanche world in Southern BC and Alberta, professionals rely on a daily information exchange to help manage the complexity of snowpack/terrain variability, to provide a “heads up” early warning system or a nearest neighbor confirmation: “yes, they’re seeing what we’re seeing.” Each day we scan through thousands of bits of data and information on the InfoEx, then go into the field and gather more, aggregate the data into information packets, and analyze and communicate patterns that we refer to as hazard factors.

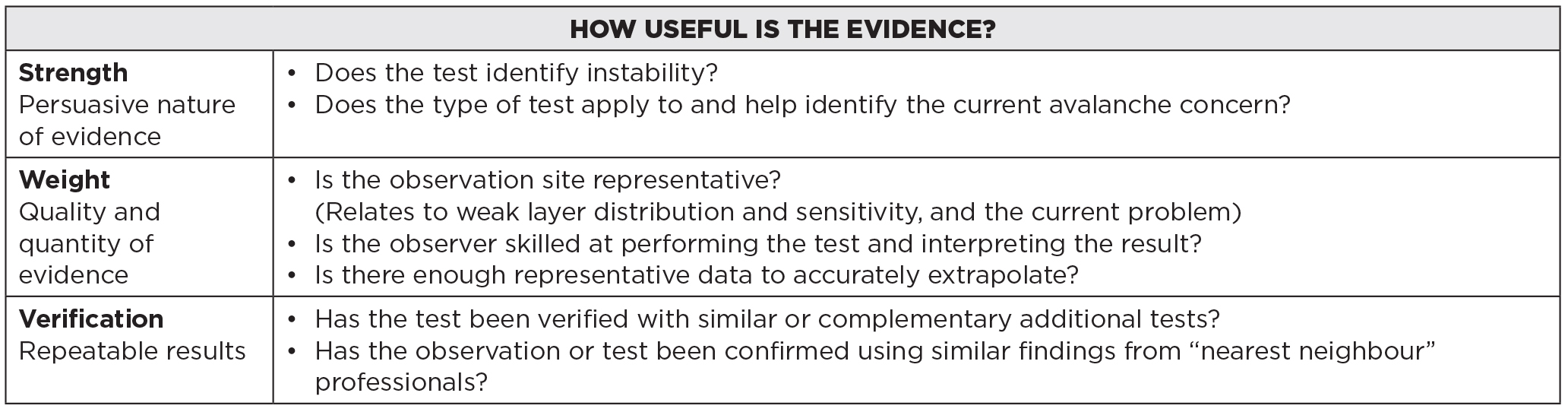

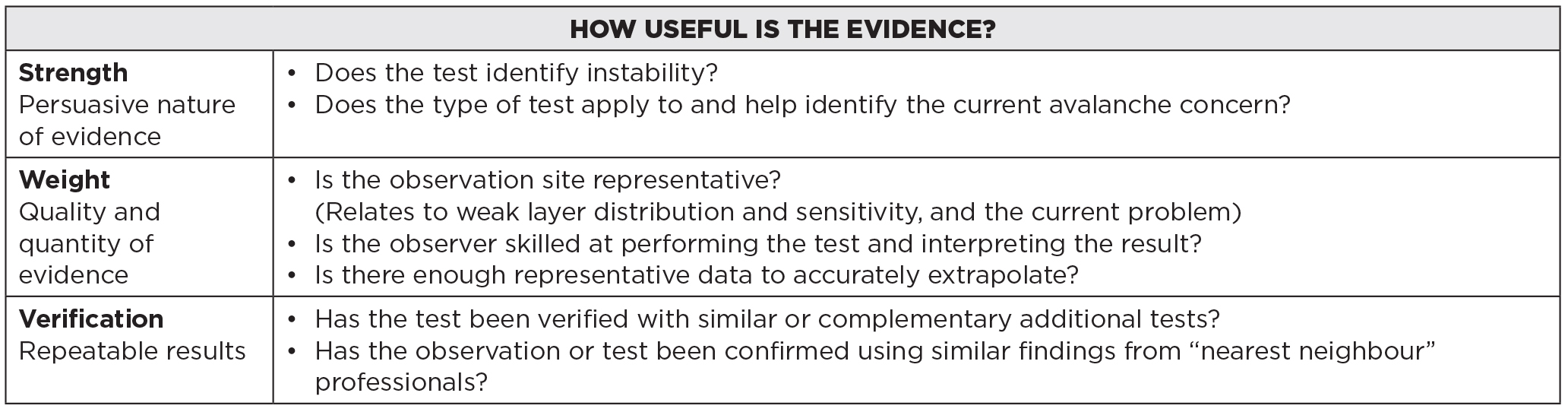

This article, along with the “How Useful Is the Evidence?” table below, was developed in the fall of 2011 as part of an Avalanche Operations Level 2 Module 3 training course handout to help learners apply the notion of strength and weight to field observations, to use a checklist style verification process, and to encourage quality craftsmanship and a thorough approach when analyzing and discussing snowpack factors. It may help the learner to recognize whether or not their evidence drawn from snowpack tests is helpful to their decisions.

Fig. 1: From CAA Level 2 Module 3 handout

CRAFTSMANSHIP AND CONSISTENCY

“Jeez…. the weather and snowpack vary enough; can’t we all just do the same damn observation the same damn way?”

Regional and operational consistency with technique, application and interpretation ensures the quality of data gathered, recorded and communicated. On professional level avalanche training courses, instructors stress that practice, technique, and a meticulous day-to-day consistency with observations, recording and communication should never be undervalued, nor should the scope of the task be underestimated:

- Ensure that there is an objective for each snowpack test. The early morning safety meeting agenda usually includes assessing the day’s avalanche problem and identifying gaps in knowledge. Know what you’re looking for prior to looking.

- Select relevant sites for field tests using experience and the seasonal observation of how the snow is layered over the terrain. Once sites have proven their worth, they are repeatedly used season to season.

- Conduct tests skillfully using standardized, practiced techniques. Observers use established guidelines when conducting, recording, and communicating weather, snowpack and avalanche observations; these come from Observation Guidelines and Recording Standards for Weather, Snowpack and Avalanches (OGRS) and Snow, Weather, and Avalanches: Observation Guidelines for Avalanche Programs in the United States (SWAG).

- Ensure consistency within an operation by having employees conduct observations side by side. Discuss technique and compare interpretation during preseason staff training.

THE RIGHT TOOL FOR THE RIGHT JOB

The CAA’s OGRS and the AAA’s SWAG provide guidelines for how to conduct and record weather, snowpack, and avalanche observations. Other than a few comments about the observed limitation of certain tests, these guidelines deliberately offer little information on how to apply or interpret the observations as they relate to an avalanche problem or forecast. This knowledge and proficiency is gained through other means, including research articles, professional avalanche training, and on the job training and mentorship.

Of course there isn’t any single test that will reveal exactly what you need to know about snow. Yet every decade or so it seems that guides and forecasters have a new favourite “go to” decision making aid they default to when investigating the current avalanche problem. First it was the Rutschblock test (RB), then the compression test (CT)—or the other way around depending on your region—and now it’s the extended column test (ECT). In a helpful 2010 article “Which Obs for Which Avalanche Type?” Bruce Jamieson and others conducted a field study that did an excellent job of directing attention to those observations that best identify each avalanche concern. The combination of determining the avalanche problem prior to departure (Atkins 2004) and having a good idea about which field observations and tests will best identify the problem is a good start when choosing the right tool for the right problem.

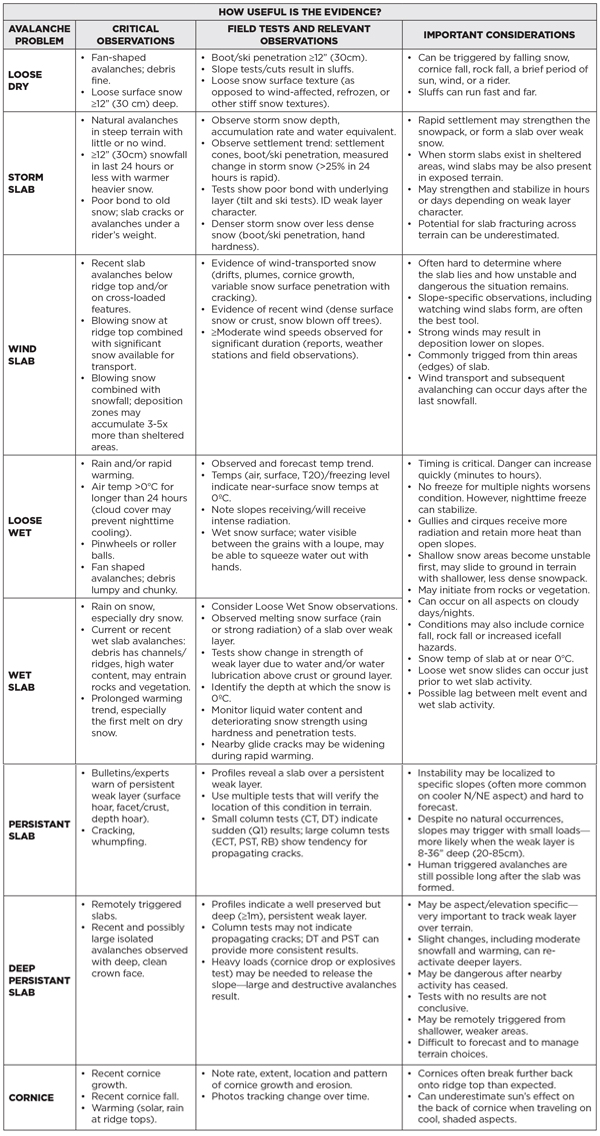

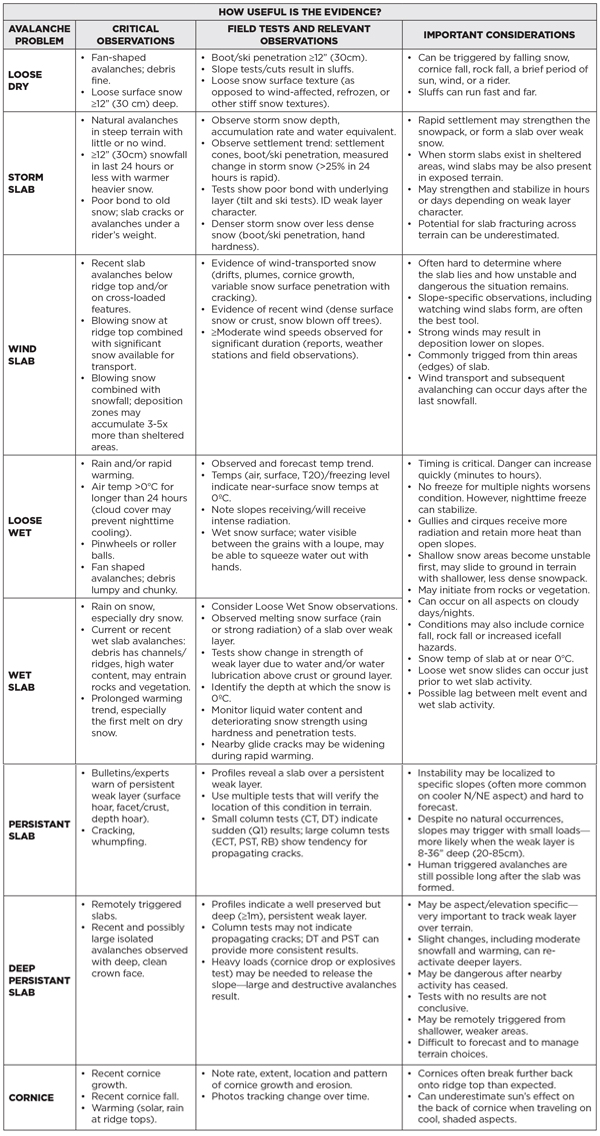

The AIARE Avalanches and Observations Reference included below (published in the AIARE Field Book and instructor materials) was inspired by the aforementioned article and is a useful field reference to help learners target those concerns described in the daily avalanche advisory.

Fig. 2: From AIARE instructor materials and field book, 2012

MANAGING FALSE STABLE AND FALSE UNSTABLE RESULTS

Doug Chabot, forecaster at the Gallatin National Forest Avalanche Center, brings up a good point in a recent blog post: “Snowpit tests are used to show instability, not stability. Never stability. Snow pits (and snowpack tests) do not give the green light to ski; they just give us the red light to not ski. An unstable test result is always critical information. A stable test result does not mean the snow is stable a hundred feet away.”

Chabot’s advice points to the quandary many backcountry recreationists face when analyzing snowpack factors: a test result illustrating unstable snow urges cautious risk reduction, but what does a “no result” mean? Yet estimating where the snow is strong and where the snow is weak is an important skill—particularly for guides committing clients to terrain. Determining stability or the “likelihood that avalanches will not occur” involves a detailed process of gathering evidence, drawing a big picture perspective and not leaping to conclusions from a single observation or test result.

Knowing the sites that information is coming from, having a systematic or “toolbox approach” to clue gathering (see Fig. 3), and observing the terrain and trends over time are all crucial links in the chain of gathering information and applying it to a hazard analysis. And knowing to what degree those links are missing and then defining the information deficit (whether the uncertainty is weak layer location and distribution, character and sensitivity, or slab characteristic and estimation of destructive potential) is all part of guide and forecaster daily discussion. In addition to the strength, weight and verification checklist provided in Fig. 1, the following points may help when interpreting the day’s investigations.

- A seasonal perspective of where the terrain has historically formed stronger and weaker snow is important. Basal facet development tends to repeat itself in seasonal trends. While near-surface persistent weak layers tend to have a broader distribution, sun or wind effect can result in feature scale variability in weak layer character. For example, DF (decomposed and fragmented snow grain) layers can be unstable locally but may not be problematic on a drainage scale. Expect a higher incidence of false stable test results when observing locally unstable layers like DFs, graupel or sun crust/DF interfaces.

- One of the best tools for determining the nature of snowpack variability is to simply observe and memorize how the current snow surface or near surface condition changes over the terrain. Knowing the extent of surface hoar, facet, crust, or graupel formation and the distribution of storm snow and wind redistribution of snow helps to form a baseline when later estimating snowpack strength. Imagine yourself a heli ski guide with the opportunity to travel over 10s or 100s of kilometres of terrain on any given day. Using your eyes, your skis, and a few quick penetration and hand tests provides insight into what to expect when the snow surface becomes a buried weak layer. “Quick tests,” while not subject to the same formal research as standard tests, still provide helpful information to an approximate depth of 45cm (Schweizer and Jamieson 2010).

- A checklist sum of snowpack structural properties (a.k.a. “yellow flags” or the Snowprofile Checklist (Jamieson and Schweizer 2005)) provides valuable clues about which layer interface is most likely to result in a localized failure/fracture. However, as the checklist sum has a tendency to overestimate instability (false unstable = false alarm), further tests are conducted to determine propensity for propagation (Winkler and Schweizer 2008). The combination of CTs (with fracture character) and the profile checklist sums provides an excellent tool to determine which layer is worth testing prior to a propagation saw test (PST) or ECT propagation propensity test.

- The large column snowpack tests that employ taps or jumps to apply a load to the slab (e.g., the ECT and RB) may still indicate a “no result” when a significant weak layer is buried approximately 1m or deeper, and/or when stiffer snowpack layer characteristics (e.g., a crust) reduce the likelihood that surface taps are affecting the deeper weak layer. The cautionary note is that skier triggering of a layer of this depth may still occur from a shallower or weaker area (see case history below). In this scenario, one would not use the ECT or RB as the sole observation tool. It may be more prudent to identify the deeper weak layer with a CT or deep tap test (DT) and, if a sudden fracture is observed, conduct a PST (or choose a shallower location for an ECT) to observe propensity for crack propagation in the layer. Pairing a small column test (which may err on the side of false unstable but identifies fracture character) with a large column test (which tests for propagation propensity) both reduces the likelihood of a missed observation and provides more information with a verified result. This “toolbox approach” may help interpret a potential “no result” or a false stable result.

The 2010 Schweizer and Jamieson article “Snowpack Tests for Assessing Snow-Slope Instability” provides an updated, excellent perspective directed at a general audience on snowpack test use and limitations. The following summary points have been paraphrased from the article:

- A good test method should predict stable and unstable scenarios equally well.

- Column tests are particularly helpful for assessing persistent slab conditions.

- Small column tests (CT and DT) are useful for identifying weak layers and likelihood of initiation but have a tendency to overestimate instability (false unstable results). Observing fracture character improves, to a degree, the interpretation of the test results. These tests are a better indicator of layer character than instability.

- Large column tests are better at predicting propensity for fracture propagation than small column tests, particularly when used in combination with other large column tests. Comparative studies suggest that the RB, ECT, and PST have comparable accuracy.

- With large column tests, repeated test results in the same location are useful, but tests repeated on similar, nearby slopes add value.

- Each test has a margin of error. Even with very experienced observers an error rate of 5-10% is to be expected. Site selection and interpretation require experience.
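The first and third summary points above can be made concrete with a small tally. The Python sketch below compares test results against what the slope actually did and reports how often unstable and stable cases are correctly identified, along with the false stable and false unstable rates. The class and function names (`Tally`, `rates`) and all of the counts are invented for illustration; they are not data from Schweizer and Jamieson or from this article.

```python
from dataclasses import dataclass


@dataclass
class Tally:
    """Counts of test results versus slope outcome (hypothetical numbers only)."""
    unstable_flagged: int  # slope unstable, test indicated unstable (correct)
    false_stable: int      # slope unstable, test indicated stable (missed)
    stable_cleared: int    # slope stable, test indicated stable (correct)
    false_unstable: int    # slope stable, test indicated unstable (false alarm)


def rates(t: Tally) -> dict:
    """Return detection rates and error rates for one test method."""
    unstable_total = t.unstable_flagged + t.false_stable
    stable_total = t.stable_cleared + t.false_unstable
    if unstable_total == 0 or stable_total == 0:
        raise ValueError("need at least one unstable and one stable case")
    return {
        "unstable_detected": t.unstable_flagged / unstable_total,
        "stable_detected": t.stable_cleared / stable_total,
        "false_stable_rate": t.false_stable / unstable_total,
        "false_unstable_rate": t.false_unstable / stable_total,
    }


# Made-up tally for a small column test that tends to overestimate instability.
small_column = Tally(unstable_flagged=45, false_stable=5,
                     stable_cleared=30, false_unstable=20)
for name, value in rates(small_column).items():
    print(f"{name}: {value:.2f}")
```

In this invented tally the test catches most unstable slopes but raises many false alarms, so its two detection rates are far apart. A method that predicts "stable and unstable scenarios equally well" would show the two rates close together.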

A TOOLBOX APPROACH TO INVESTIGATING LAYERS OF CONCERN

The Toolbox Approach in Fig. 3 may help students avoid the relatively high number of false predictions that occur due to a combination of several factors, such as extrapolation from single tests and high snowpack variability. The diagram supports a dialogue encouraging students to take a step-by-step approach and observe clues from a combination of tests and observation methods. For example, the combination of the “yellow flags” checklist sums and fracture character in compression tests provides clues, not confirmation, about whether or not a “propagation likely” scenario exists, which is then verified with a large column test that is suitable for testing within the limitations posed by the particular snowpack structural properties. Understanding test limitations, matching the test to observed structural properties and verifying observations with complementary tests may improve the ability to interpret the test results and reduce false stable or false unstable predictions. I created this diagram and instructional method five years ago and have included it on the L2M3 and American professional level courses.

FIG. 3 The toolbox approach, ver. 6, Zacharias, 2015
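The step-by-step reasoning described above can be written out as a short sketch. The Python below is an illustration of that logic only, not the Fig. 3 diagram itself and not a decision tool: the names (`LayerClues`, `suggest_next_steps`) are hypothetical, the checklist threshold is a caller-supplied placeholder rather than a published value, and the 1 m depth comes from the "approximately 1m or deeper" note earlier in the article.

```python
from dataclasses import dataclass
from typing import List

DEEP_LAYER_M = 1.0  # "approximately 1m or deeper", per the article text


@dataclass
class LayerClues:
    depth_m: float           # depth of the layer of concern below the surface
    yellow_flags: int        # profile checklist sum at the layer interface
    sudden_fracture: bool    # sudden fracture character observed in a CT or DT
    stiff_layer_above: bool  # e.g. a crust that may shield the layer from taps


def suggest_next_steps(c: LayerClues, flag_threshold: int) -> List[str]:
    """Map clues to follow-up tests. flag_threshold is a hypothetical input."""
    steps = []
    # Step 1: checklist sum and fracture character give clues, not confirmation.
    if c.yellow_flags >= flag_threshold or c.sudden_fracture:
        steps.append("clue: propagation may be likely; verify with a large column test")
    else:
        steps.append("no clue yet: keep observing; a non-result is not proof of stability")
    # Step 2: match the large column test to the layer depth and structure.
    if c.depth_m >= DEEP_LAYER_M or c.stiff_layer_above:
        steps.append("surface taps may not load this layer: use a PST, or an ECT at a shallower site")
    else:
        steps.append("layer within reach of surface loading: ECT or RB is a suitable large column test")
    # Step 3: one result is one observation.
    steps.append("repeat on similar nearby slopes before drawing conclusions")
    return steps


# Hypothetical deep layer with a sudden fracture in a deep tap test.
deep = LayerClues(depth_m=1.1, yellow_flags=2, sudden_fracture=True,
                  stiff_layer_above=False)
for step in suggest_next_steps(deep, flag_threshold=5):
    print("-", step)
```

Returning a list of suggestions rather than a single verdict is deliberate: the sketch points to the next observation to make and leaves the terrain decision to the observer.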

A CAUTIONARY TALE

Backcountry winter travelers are always encouraged to make weather and snowpack observations in the field, and when possible identify on a drainage and slope scale what the public avalanche advisory describes for the region or range. For the most part, this is an effective risk management strategy. However, there have been a number of close calls, incidents and avalanche accidents in which backcountry users increased their risk by not managing exposure when gathering information or by misinterpreting the observations they collected. In December 2007, a fatal avalanche accident occurred on Tent Ridge in Kananaskis Country when two backcountry skiers were killed conducting a snow profile in the start zone of an avalanche path. Older examples of riders conducting tests on or very near the slope and being subsequently killed include Wawa Bowl, AB, and Mt. Neptune, BC in 1984, Thunder River, BC in 1987, and White Creek, BC in 1993. More recent incidents include Ningunsaw Pass, BC in 1999 and Twin Lakes, CO in 2014, where a group of seven dug a profile and conducted eight CTs on a slope before choosing to ski it (see CAIC Incident Report for more information).

There are also several examples of “close calls” where test results gathered and extrapolated to chosen terrain illustrated one problem but not the primary concern. A recent example occurred in December 2013 in Hope Creek, BC involving two backcountry skiers. This is an unfortunate example where a combination of well-intentioned observations formulated a confirmation bias and decision making trap. The riders’ observations prior to descending the slope included three existing ski tracks on the slope, 15cm recent snow, light winds, -3°C and no recent avalanches. The group conducted several tests with the following results: CTM16 (SC), ECTP 23, and “numerous ski cuts in the start zone,” all revealing a significant surface hoar layer (size 7-10mm) 40cm deep but nothing deeper. A DT also revealed no results on deeper layers. The group decided that the surface hoar layer was manageable and to ski the slope one at a time. Rider 1 skied the slope with no problems and stopped 400m below, adjacent to the path trim line. Unfortunately, Rider 2 triggered the slope after landing an air low down in the start zone. The resulting D3 avalanche fractured 100m wide on basal facets 80-120cm deep and well below the surface hoar layer. The fast moving avalanche debris caught Rider 1 on the path’s edge before he could scramble to safety. Both involved were carried approximately 700m downslope. Both were buried and badly injured but were able to self extricate and call for help, and were successfully rescued.

(Editor’s Note: read a first-hand account of this avalanche by Billy Neilson in The Avalanche Journal Volume 106).

Those involved generously provided the CAA occurrence report with snowpack observations and insight into what gave them confidence to venture onto this particular slope. This event is a helpful wake-up call as we can all place ourselves in their decision making shoes. In hindsight, it is revealing to examine the Kicking Horse Mountain Resort local forecaster’s public video statement issued on Vimeo on December 13, 2013 for the nearby backcountry terrain. The forecaster warned there is a “basal weakness at the bottom of the snowpack that is still reactive,” and “skier triggered size 3 avalanches have occurred,” and “avalanches had triggered larger slopes sympathetically,” and that “now is the time to be very mindful of slope history.” He went on to emphasize “without that degree of confidence that an avalanche has happened [on your slope of interest], you are really rolling the dice hopping onto big terrain.” This incident—though occurring over one week after the video statement—illustrates that when it comes to managing deeper persistent slabs, the careful observations and good well-learned techniques of the backcountry travelers were not sufficient to protect them from the lingering hazard. It also reveals the big-picture perspective of the forecaster, who clearly warned of the more serious basal concern.

Experience with this type of problem, experience monitoring unstable snow in a shallower snow climate, experience matching specific tests to specific problems, and experience managing false stable results and prioritizing the key concerns are all factors that may have given the experienced forecaster a different perspective than the backcountry riders. In this case, the knowledge of how the snowpack lay over the terrain held more weight than even a series of test results, all of which drew attention to a secondary problem that, while significant, was less so than what lurked below.

The bottom line is that snowpack tests used to predict instability, while valuable when employed appropriately, are not foolproof. As Schweizer and Jamieson state plainly, and importantly, in the aforementioned 2010 article, “decisions about traveling in terrain should not be based solely on stability (snowpack) test results.”

REFERENCES

American Avalanche Association and USDA Forest Service National Avalanche Center. 2010. Snow, Weather and Avalanches: Observation Guidelines for Avalanche Programs in the United States. Pagosa Springs, CO: AAA. http://www.avalanche.org/research/guidelines/pdf/Introduction.pdf

Atkins, Roger. 2004. “An Avalanche Characterization Checklist for Backcountry Travel Decisions.” Proceedings of the 2004 International Snow Science Workshop. Jackson Hole, WY. http://arc.lib.montana.edu/snow-science/objects/issw-2004-462-468.pdf.

Avalanche Canada Incident Report Database. Golden, Hope Creek Drainage, Privateer Mountain. December 29, 2013. http://old.avalanche.ca/cac/library/incident-reportdatabase/view.

Campbell, Cam. 2008. “Testing for Initiation and Propagation Propensity.” Canadian Avalanche Association Level 2 Module 3 Lecture.

Canadian Avalanche Association. 2014. Observation Guidelines and Recording Standards for Weather, Snowpack and Avalanches. Revelstoke: CAA. www.avalancheassociation.ca/resource/resmgr/Standards_Docs/OGRS2014web.pdf

Chabot, Doug. “Another viewpoint on a Backcountry Magazine article.” Gallatin National Forest Avalanche Center Blog, November 6, 2015. http://www.mtavalanche.com/blog/another-viewpoint-backcountry-magazine-article

Colorado Avalanche Information Center. Colorado, Star Mountain. February 15, 2014. https://avalanche.state.co.us/caic/acc/acc_report.php?acc_id=526&accfm=inv

Gauthier, Dave, Cameron Ross, and Bruce Jamieson. 2008. “How To: The Propagation Saw Test.” University of Calgary Applied Snow and Avalanche Research. October 2008. http://www.ucalgary.ca/asarc/files/asarc/PstHowTo_Ross_Oct08.pdf.

Jamieson, Bruce. 2009. “Mountain Snowpack and Spatial Variability.” Canadian Avalanche Association Level 2 Module 1 Lecture.

Jamieson, Bruce. 2003. “Risk Management for the Spatial Variable Snowpack.” Avalanche News 66 (Fall 2003): 30-31.

Jamieson, Bruce and Jürg Schweizer. 2005. “Using a Checklist to Assess Manual Snow Profiles.” Avalanche News 72 (Spring 2005): 57-61.

Jamieson, Bruce and Torsten Geldsetzer. 1996. Avalanche Accidents in Canada Volume 4: 1984-1996. Revelstoke: Canadian Avalanche Association.

Jamieson, Bruce, Jürg Schweizer, Grant Statham and Pascal Haegeli. 2010. “Which Obs for Which Avalanche Type?” Proceedings of the 2010 International Snow Science Workshop. Squaw Valley, CA. http://arc.lib.montana.edu/snowscience/objects/ISSW_O-029.pdf

Klassen, Karl. 2002. American Institute for Avalanche Research and Education Level 2 Student Manual. 2002 Version.

Ross, Cameron, and Bruce Jamieson. 2008. “Comparing Fracture Propagation Tests and Relating Test Results to Snowpack Characteristics.” Proceedings of the 2008 International Snow Science Workshop. Whistler, BC. http://arc.lib.montana.edu/snow-science/objects/P__8177.pdf.

Schweizer, Jürg and J. Bruce Jamieson. 2010. “Snowpack Tests for Assessing Snow-Slope Instability.” Annals of Glaciology 51(54): 187-194.

Simenhois, Ron and Karl Birkeland. 2007. “An update on the Extended Column Test: New Recording Standards and Additional Data Analyses.” The Avalanche Review 26(2).

Zeleny, Milan. 1987. “Management Support Systems: Towards Integrated Knowledge Management.” Human Systems Management 7: 59–70.

“Skiers Died Testing for Avalanche.” The Calgary Herald, December 11, 2007. Accessed December 10, 2015. http://www.canada.com/story.html?id=75d72a3c-c743-4c65-a0c2-a18728813217.